(xe2,ye2)Recall from the introduction that the area $$A = \int_{a}^{b} p(x)\ dx \approx (b-a) \ \frac{1}{n} \sum_{i=1}^{n} p(X_i),$$ where $X_1, X_2,...,X_n$ is an uniform $U[a,b]$ distribution sample.

Instead of using it to estimate $\pi$ or the area of polygon, a more general usage is to estimate the complicated integral.[19] Another well-known method to approximate an integral is by Simpson's rule, which was first introduced in A-level study before. The formula is given by the following:$$A = \int_{a}^{b} f(x)\ dx \approx \frac{\Delta{x}}{3}(f(x_0) + 4f(x_1) + 2f(x_2) + 4f(x_3) + 2f(x_4) + ... + 4f(x_{n-1})+f(x_n)),$$ where $n$ is positive even, $\Delta{x} = \frac{b-a}{n}$, $x_i = a + i\Delta{x}$ and $i = 0,1,...,n$.[13]

In R, we can use the following function to compute the integration by Simpson's rule:

simpsons_rule <- function(f,a,b,n=10000) {

if (n <= 0 || n %% 2 != 0) return("Please enter positive even number n")

if (!is.function(f)) return("Please enter function f")

if (a == Inf) a <- 1e4 else if (a == -Inf) a <- -1e4 else if (b == Inf) b <- 1e4 else if (b == -Inf) b <- -1e4

delta_x <- (b - a)/n

xi <- seq.int(a,b,length.out = n + 1)[-1][-n]

odd <- seq.int(1,n - 1,2)

if (n > 2) {

even <- seq.int(2,n - 2,2)

approx <- delta_x/3*(f(a) + 4*sum(f(xi[odd])) + 2*sum(f(xi[even])) + f(b))

} else {

approx <- delta_x/3*(f(a) + 4*sum(f(xi[odd])) + f(b))

}

return(approx)

}Example 5.1 Estimate $\int_{0}^{1} e^{-x^2} \ dx$ by Monte Carlo method and by Simpson's rule.

By Monte Carlo method:

n <- 10000

x <- runif(n)

a <- mean(exp(-x^2))

a## [1] 0.7478853By Simpson's rule:

fx <- function(x) exp(-x^2)

simpsons_rule(fx,0,1,10)## [1] 0.7468249Example 5.2 Estimate the length of the curve $y = \ln{x}$ over the interval $[1,4]$.

Arc length of a curve over $[a,b]$ is $\int_{a}^{b} \sqrt{1 + (\frac{dy}{dx})^2} \ dx$, which is $\int_{1}^{4} \sqrt{1 + \frac{1}{x^2}} \ dx$ in this case. The exact integral is not easy to be determined, but we can still use the Monte Carlo method or Simpson's rule to estimate it easily.

n <- 10000

x <- runif(n,1,4)

a <- 3*mean(sqrt(1+x^-2))

a## [1] 3.344581The exact integral is $3.342800$ to 6 decimal places, or $\sqrt{17} - \sqrt{2} + \frac{1}{2} \ln \left(18 \sqrt{2}-3 \sqrt{17}-2 \sqrt{34}+27\right)-\frac{3}{2}\ln{2}$.

Notice that the variance of area depends on the variance of the estimator, which is $p(X)$ in this case. Then if we can reduce the variance of the estimator, the estimation will be more accurate. One important technique to reduce variance, is the antithetic variates method.[14]

Instead of the original method, Monte Carlo method with antithetic variables is to set up the second variable that depends on the original variable, which the variance of the variables sum is less than the variance of the original variable.[19]

One of the basic properties of variance is $\text{Var}(X + Y) = \text{Var}(X) + \text{Var}(Y) + 2\text{Cov}(X,Y)$, which implies that if the covariance of $X$ and $Y$ is negative, then the variance of $X+Y$ is less than the sum of their variance. If we can reduce the variance, then the estimation will be improved.

For example, if $Y = 1 - X$, then $$\text{Cov}(X, 1-X) = E\left(X(1-X)\right) - E(X)E(1-X) = E(X) - E(X^2) - E(X)\left(1-E(X)\right) = - \text{Var}(X),$$ which is a negative value, as the variance is positive (or zero if given constant values).

But when we are using Monte Carlo method, we will need to consider $\text{Cov}\left(p(X),p(1-X)\right)$, so we can't easily deduce the sign of the covariance. But think about the monotonicity of the function, if a function $p$ is not monotonic over a region, for example $p(x) = \cos{x}$ over $[-1,1]$, then let $Y = X$ and $p(X) = p(Y)$, which implies that we just repeat the original method again. In simple, if $p$ is monotonic, then $\text{Cov}(p(X),p(Y))$ is negative.

Example 5.3 Estimate $\int_{0}^{1} e^{-x^2} \ dx$ again. Notice that the function $e^{-x^2}$ is monotonic decreasing over $[0,1]$, then a Monte Carlo method with antithetic variables is $$\frac{1}{n} \sum_{i=1}^{n} \left(\frac{1}{2}e^{-X_i^2} + \frac{1}{2}e^{-(1-X_i)^2}\right),$$ and the code looks like this:

n <- 10000

x <- runif(n,0,1)

y <- 1 - x

a <- mean(exp(-x^2)/2 + exp(-y^2)/2)

a## [1] 0.7464516The difference between the original and arithetic is not obvious if only run once, so let's test it more:

n <- 10000

a1 <- c()

a2 <- c()

for (i in 1:10) {

x <- runif(n,0,1)

y <- 1 - x

a1 <- c(a1,mean(exp(-x^2)))

a2 <- c(a2,mean(exp(-x^2)/2 + exp(-y^2)/2))

}

data.frame(Original=(a1-0.746824)*1000,Antithetic=(a2-0.746824)*1000)## Original Antithetic

## 1 -1.272708 0.13550731

## 2 0.215526 0.16875908

## 3 -1.066277 -0.35460663

## 4 -1.525344 0.07371390

## 5 -1.148357 -0.25150037

## 6 1.637443 0.30984076

## 7 1.331888 0.35226570

## 8 2.680033 0.27922990

## 9 -2.010939 -0.06512753

## 10 0.576300 0.06304276The exact integral is $0.746824$ to 6 decimal places, and we 1000 times the difference between the estimated value and the exact value. It is obvious that, the antithetic method is an improvement to the original method, as the variance is reduced.

Also, if we uniformly generate $X$ over $[a,b]$, then $Y = a + b - X$ is still within the range $[a,b]$, and $\text{Cov}(X,Y)$ is negative.

Example 5.4 Estimate the length of the curve $y = \ln{x}$ over the interval $[1,4]$ with improved method.

n <- 1000000

a1 <- c()

a2 <- c()

for (i in 1:10) {

x <- runif(n,1,4)

y <- 5 - x

a1 <- c(a1,3*mean(sqrt(1+x^-2)))

a2 <- c(a2,3*mean(sqrt(1+x^-2)/2 + sqrt(1+y^-2)/2))

}

data.frame(Original=(a1-3.342800)*1000,Antithetic=(a2-3.342800)*1000)## Original Antithetic

## 1 0.16577621 -0.12269626

## 2 0.22200712 0.02078038

## 3 -0.22798658 0.08306721

## 4 -0.12435955 0.06873986

## 5 -0.39593390 -0.26677621

## 6 0.09816367 -0.11048513

## 7 0.35040366 0.03770652

## 8 -0.15715313 0.13883513

## 9 -0.18167042 -0.09729905

## 10 -0.06786056 -0.05691587Now we can write the function of Monte Carlo integration in R.

monte_carlo_integral <- function(f,a,b,n=10000,method="original") {

if (n <= 0) return("Please enter positive number n")

if (!is.function(f)) return("Please enter function f")

if (a == Inf) a <- 1e4 else if (a == -Inf) a <- -1e4 else if (b == Inf) b <- 1e4 else if (b == -Inf) b <- -1e4

x <- runif(n,a,b)

if (method == "original") {

area <- (b-a)*mean(f(x))

} else if (method == "antithetic") {

y <- a + b - x

area <- (b-a)*mean(f(x)/2 + f(y)/2)

}

return(area)

}In the case of Inf, just set a large number and evaluate it. If the integral of the given function $f$ is converge when $x$ tends to infinity, then the $f(x)$ tends to $0$. As we are estimating the integral, it won't make a huge difference whether the upper bound is $10000$ or $100000$ or infinity.

Now think about using Monte Carlo integration to estimate the area of polygon, as we can apply the antithetic method to reduce the variance, it is possible to apply the same idea to have a better estimation. However, this method only can apply to the convex and bounded concave polygon, if we want to apply the integration technique. As we can find the maximum/minimum of the bounds, and divide them into the monotonic functions.

But more generally, we can still apply the antithetic method with the statistical Monte Carlo simulation, like counting the points inside the polygon. If we generate 10000 points in both $x$ and $y$ coordinates as an uniform $X \overset{\text{i.i.d.}}{\sim} U[a,b]$ and an uniform $Y \overset{\text{i.i.d.}}{\sim} U[c,d]$ distribution sample respectively, where $a,b$ are the minimum and maximum of $x$-coordinate of the polygon, $c,d$ are the minimum and maximum of $y$-coordinate of the polygon. Then we can find other 3 pairs of $x$ and $y$, which are $(a+b-X,Y),(X,c+d-Y)$ and $(a+b-X,c+d-Y)$.

Now rewrite the area_mc_method_v3, to get the version 4:

area_mc_method_v4 <- function(x,y,k=5000, typepoly = TRUE, plotpoly=TRUE, point_method = "wind", mc_method = "original") {

if (length(x) <= 2) return("Input values are not vaild. Error: Only two vertices")

if (typepoly) {

type <- type_of_polygon_v2(x,y)

if (type == "Convex") {

print("Convex Polygon");mc_method <- "antithetic"

} else if (type == "Self-intersecting") {

print("Self-intersecting Polygon")

} else if (type == "Concave") {

print("Concave Polygon")

}

} else {

if (type_of_polygon(x,y) == "Convex") mc_method <- "antithetic"

}

count <- 0

rectangle_area <- (max(x)-min(x))*(max(y)-min(y))

random_x <- generate_k_random_point(x,y,k)$x

random_y <- generate_k_random_point(x,y,k)$y

inside <- c()

inside2 <- c()

inside3 <- c()

inside4 <- c()

if (mc_method == "original") {

for (i in 1:k) {

count <- count + point_inside_v2(x,y,random_x[i],random_y[i],point_method)

if (point_inside_v2(x,y,random_x[i],random_y[i],point_method) == 1) inside <- c(inside, i)

}

approximate_area <- count/k*rectangle_area

} else if (mc_method == "antithetic") {

random_x1 <- max(x) + min(x) - random_x

random_y1 <- max(y) + min(y) - random_y

for (i in 1:k) {

count <- count + point_inside_v2(x,y,random_x[i],random_y[i],point_method) + point_inside_v2(x,y,random_x1[i],random_y[i],point_method) + point_inside_v2(x,y,random_x[i],random_y1[i],point_method) + point_inside_v2(x,y,random_x1[i],random_y1[i],point_method)

if (point_inside_v2(x,y,random_x[i],random_y[i],point_method) == 1) inside <- c(inside, i)

if (point_inside_v2(x,y,random_x1[i],random_y[i],point_method) == 1) inside2 <- c(inside2, i)

if (point_inside_v2(x,y,random_x[i],random_y1[i],point_method) == 1) inside3 <- c(inside3, i)

if (point_inside_v2(x,y,random_x1[i],random_y1[i],point_method) == 1) inside4 <- c(inside4, i)

}

approximate_area <- count/(4*k)*rectangle_area

}

if (plotpoly) {

plot(x,y,pch="")

polygon(x,y)

polygon(c(min(x), max(x), max(x), min(x)), c(min(y), min(y), max(y), max(y)), lty = 3)

points(random_x[inside],random_y[inside], pch = ".", col = "red")

points(random_x[-inside],random_y[-inside], pch = ".")

if (mc_method == "antithetic") {

points(random_x1[inside2],random_y[inside2], pch = ".", col = "red")

points(random_x1[-inside2],random_y[-inside2], pch = ".")

points(random_x[inside3],random_y1[inside3], pch = ".", col = "red")

points(random_x[-inside3],random_y1[-inside3], pch = ".")

points(random_x1[inside4],random_y1[inside4], pch = ".", col = "red")

points(random_x1[-inside4],random_y1[-inside4], pch = ".")

}

}

return(approximate_area)

}The function above also add more fectures, makes it more general to use.

But this method is just like, reflect the points around and get the average result. For some polygons, it might even reduce the accuracy. The more complexity, the less accurate. But still, this method should work well for all convex polygons, so if the type of polygon is convex, then the function will always use the antithetic method.

Example 5.5 Estimate the area of polygons by the Monte Carlo method with antithetic variables, and compare the difference with the original method.

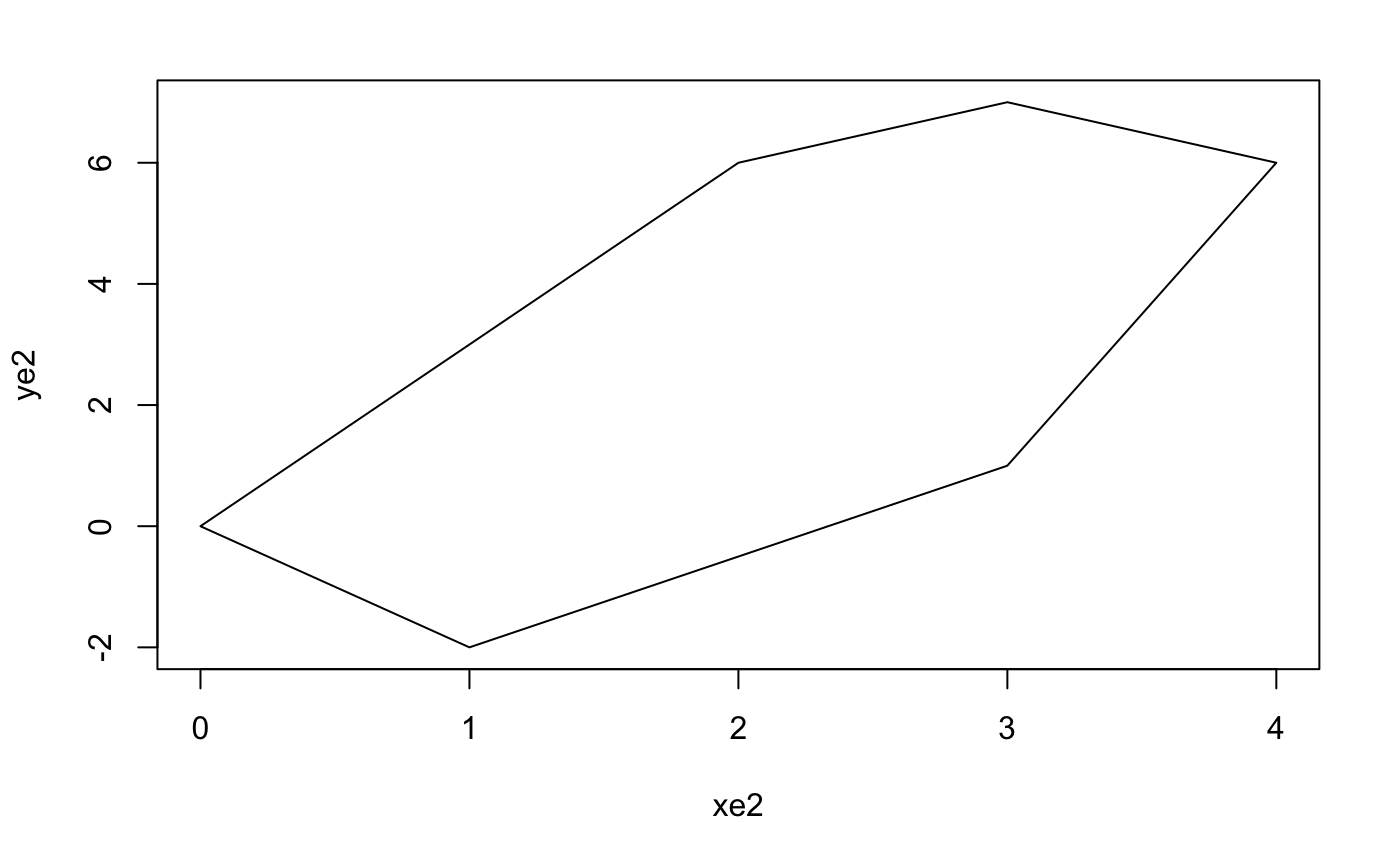

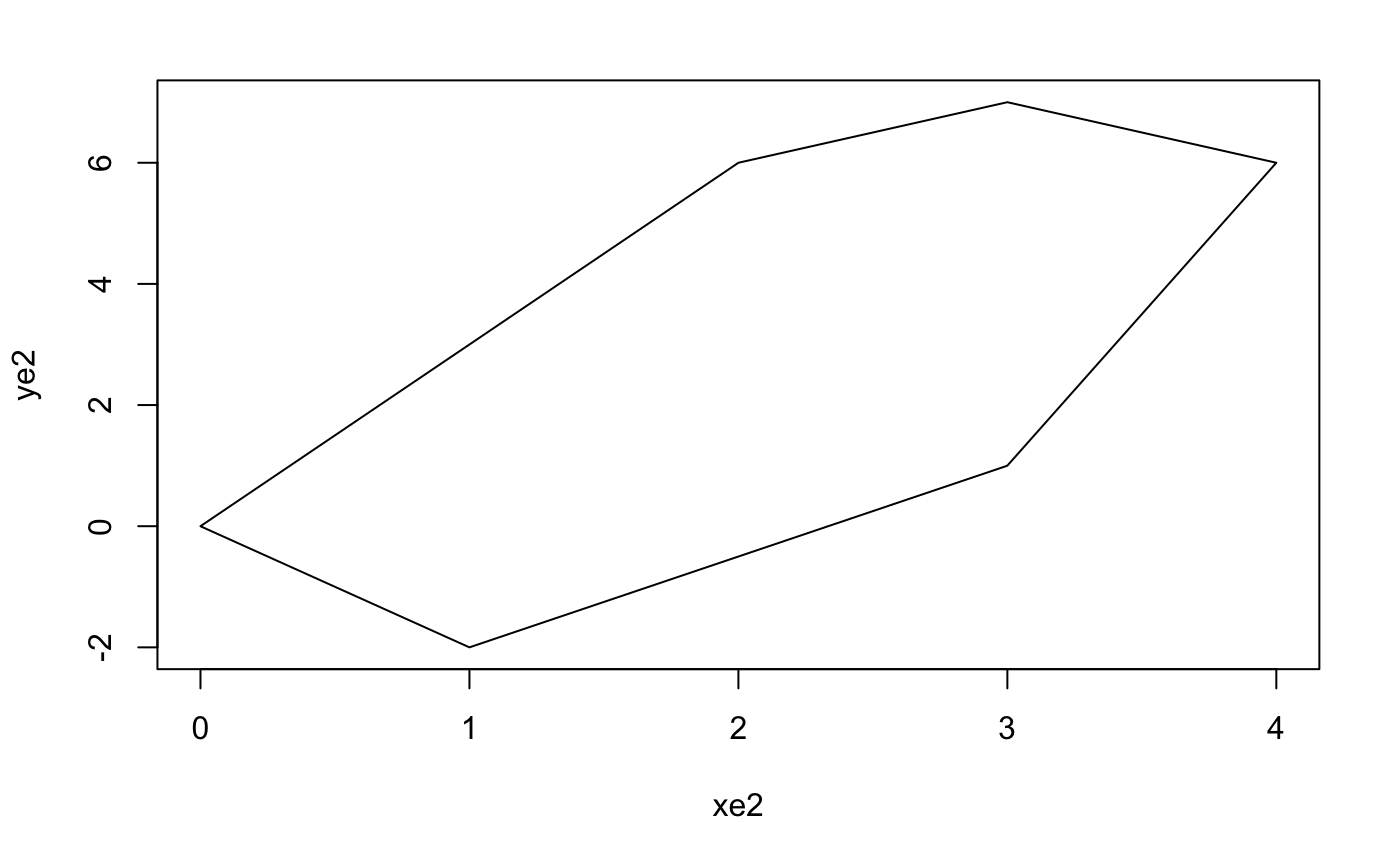

xe2 <- c(4,3,2,0,1,3)

ye2 <- c(6,7,6,0,-2,1)

plot(xe2,ye2,pch="")

polygon(xe2,ye2)

(xe2,ye2)v3 <- c()

v4 <- c()

for (i in 1:10) {

v3 <- c(v3,area_mc_method_v3(xe2,ye2,plotpoly = FALSE,typepoly = FALSE))

v4 <- c(v4,area_mc_method_v4(xe2,ye2,plotpoly = FALSE,typepoly = FALSE))

}

data.frame(Original=(v3-17.5),Antithetic=(v4-17.5))## Original Antithetic

## 1 -0.2040 0.1076

## 2 -0.1768 -0.0328

## 3 -0.2488 0.1544

## 4 0.3032 -0.0904

## 5 -0.2200 0.1004

## 6 0.3128 0.1184

## 7 0.4424 0.0230

## 8 0.1472 0.0140

## 9 -0.4000 0.1004

## 10 -0.1912 -0.1174Another approach to reduce variance, is to introduce a control variate $g$, which is a function simliar to $p$, and can be solved analytically.[15][19]

If the integral of $g$ over $[a,b]$ is $I(g)$, then the integral hence will be $$A = \int_{a}^{b} p(x)\ dx = \int_{a}^{b} \left(p(x) - g(x)\right)\ dx + \int_{a}^{b} g(x)\ dx = \int_{a}^{b} \left(p(x) - g(x)\right)\ dx + I(g).$$

As $g$ similar to $p$, the variance of $p-g$ should be smaller than $p$, then $$A \approx (b-a) \ \frac{1}{n} \sum_{i=1}^{n} \left(p(X_i)-g(X_i)\right) + I(g).$$

Example 5.6 Estimate the length of the curve $y = \ln{x}$ over the interval $[1,4]$ with improved method.

It is not hard to check that $\sqrt{1+\frac{1}{x^2}} < 1 + \frac{1}{x}$ for all $x > 0$, so we can let $g(x) =1+ \frac{1}{x}$. Then $$A \approx \frac{3}{n} \sum_{i=1}^{n} \left(\sqrt{1+\frac{1}{X^2_i}}-1-\frac{1}{X_i}\right) + \int_{1}^{4} \left(1 +\frac{1}{x}\right) \ dx = \frac{3}{n} \sum_{i=1}^{n} \left(\sqrt{1+\frac{1}{X^2_i}}-1-\frac{1}{X_i}\right) + 3 + 2\ln{2}.$$

n <- 1000000

a1 <- c()

a2 <- c()

a3 <- c()

for (i in 1:10) {

x <- runif(n,1,4)

y <- 5 - x

a1 <- c(a1,3*mean(sqrt(1+x^-2)))

a2 <- c(a2,3*mean(sqrt(1+x^-2)/2 + sqrt(1+y^-2)/2))

a3 <- c(a3,3*mean(sqrt(1+x^-2)-(1+x^-1)) + 2*log(2) + 3)

}

data.frame(Original=(a1-3.342800)*1000,Antithetic=(a2-3.342800)*1000,Control=(a3-3.342800)*1000)## Original Antithetic Control

## 1 0.21223283 0.18938566 -0.14893086

## 2 0.15050225 -0.01046107 -0.17487785

## 3 -0.11634257 0.14409480 0.11449610

## 4 -0.61327206 -0.16418989 0.34262107

## 5 -0.07992773 -0.04965741 -0.01253572

## 6 0.07871686 -0.03515993 -0.05556916

## 7 -0.01743192 0.01432607 -0.01329278

## 8 -0.01333745 0.01867702 0.02072985

## 9 0.52673886 -0.08700540 -0.21474933

## 10 0.42469550 0.03696982 -0.25150356Since it is not easy to find the $g$ just by programming, the monte_carlo_integral function won't include this method.

© Group 18